By Google Research

{jesseengel,hanoih,gcj,adarob}@google.com

Most generative models of audio directly generate samples in one of two domains: time or frequency. While sufficient to express any signal, these representations are inefficient, as they do not utilize existing knowledge of how sound is generated and perceived. A third approach (vocoders/synthesizers) successfully incorporates strong domain knowledge of signal processing and perception, but has been less actively researched due to limited expressivity and difficulty integrating with modern auto-differentiation-based machine learning methods. In this paper, we introduce the Differentiable Digital Signal Processing (DDSP) library, which enables direct integration of classic signal processing elements with deep learning methods. Focusing on audio synthesis, we achieve high-fidelity generation without the need for large autoregressive models or adversarial losses, demonstrating that DDSP enables utilizing strong inductive biases without losing the expressive power of neural networks. Further, we show that combining interpretable modules permits manipulation of each separate model component, with applications such as independent control of pitch and loudness, realistic extrapolation to pitches not seen during training, blind dereverberation of room acoustics, transfer of extracted room acoustics to new environments, and transformation of timbre between disparate sources. In short, DDSP enables an interpretable and modular approach to generative modeling, without sacrificing the benefits of deep learning. The library is publicly available and we welcome further contributions from the community and domain experts.

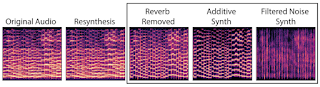

Differentiable Digital Signal Processing (DDSP) enables direct integration of classic signal processing elements with end-to-end learning, utilizing strong inductive biases without sacrificing the expressive power of neural networks. This approach enables high-fidelity audio synthesis without the need for large autoregressive models or adversarial losses, and permits interpretable manipulation of each separate model component. In all figures below, linear-frequency log-magnitude spectrograms are used to visualize the audio, which is synthesized with a sample rate of 16 kHz.

Differentiable Additive Synthesizer

The additive synthesizer generates audio as a sum of sinusoids at harmonic (integer) multiples of the fundamental frequency. A neural network feeds the synthesizer its parameters (fundamental frequency, amplitude, harmonic distribution). Harmonics follow the frequency contours of the fundamental, loudness is controlled by the amplitude envelope, and spectral structure is determined by the harmonic distribution.
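The description above can be sketched in a few lines of NumPy. This is a minimal illustration, not the DDSP library's implementation: the function name `additive_synth`, its argument layout, and the choice to silence harmonics above the Nyquist frequency and normalize the harmonic distribution are assumptions made for the example.

```python
import numpy as np

def additive_synth(f0_hz, amplitude, harmonic_distribution, sample_rate=16000):
    """Sum of sinusoids at integer multiples of a time-varying fundamental.

    f0_hz:                 (n_samples,) fundamental frequency in Hz.
    amplitude:             (n_samples,) overall amplitude envelope.
    harmonic_distribution: (n_samples, n_harmonics) relative harmonic weights.
    (Hypothetical signature, chosen for illustration only.)
    """
    f0_hz = np.asarray(f0_hz, dtype=np.float64)
    amplitude = np.asarray(amplitude, dtype=np.float64)
    dist = np.asarray(harmonic_distribution, dtype=np.float64)

    # Harmonic k sits at k * f0, so every harmonic follows the f0 contour.
    harmonic_numbers = np.arange(1, dist.shape[1] + 1)
    harmonic_freqs = f0_hz[:, None] * harmonic_numbers[None, :]

    # Assumption: drop harmonics at or above Nyquist to avoid aliasing.
    dist = np.where(harmonic_freqs < sample_rate / 2, dist, 0.0)

    # Assumption: normalize weights so `amplitude` alone sets the level.
    dist = dist / np.maximum(dist.sum(axis=1, keepdims=True), 1e-12)

    # Phase is the running sum of instantaneous frequency (radians).
    phase = 2.0 * np.pi * np.cumsum(harmonic_freqs / sample_rate, axis=0)

    return amplitude * np.sum(dist * np.sin(phase), axis=1)

# One second of a steady 440 Hz tone with four harmonics.
n = 16000
audio = additive_synth(
    f0_hz=np.full(n, 440.0),
    amplitude=np.full(n, 0.5),
    harmonic_distribution=np.tile([0.5, 0.25, 0.15, 0.1], (n, 1)),
)
```

Every operation here is differentiable, which is what would let a network's outputs be trained through the synthesizer when the same computation is written in an auto-differentiation framework.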

Modular Decomposition of Audio

A DDSP decoder was trained on a dataset of violin performances, resynthesizing audio from loudness and fundamental frequency extracted from the original audio. First, we note that resynthesis is realistic and perceptually very similar to the original audio. The decoder feeds into an additive synthesizer and a filtered noise synthesizer, whose outputs are summed before running through a reverb module. Since the model is composed of interpretable modules, we can examine the audio output from each module to find a modular decomposition of the original audio signal.
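To make the filtered noise module more concrete, here is a simplified sketch that shapes white noise with a fixed magnitude response in the frequency domain. It is an illustration under stated assumptions, not the library's code: the name `filtered_noise`, the coarse `band_magnitudes` parameter, and the use of a single time-invariant filter (rather than one filter per frame) are all choices made for this example.

```python
import numpy as np

def filtered_noise(n_samples, band_magnitudes, seed=0):
    """Shape uniform white noise with a coarse magnitude response.

    band_magnitudes: gains spread evenly from 0 Hz to the Nyquist frequency.
    (Hypothetical helper; time-invariant for simplicity.)
    """
    rng = np.random.default_rng(seed)
    noise = rng.uniform(-1.0, 1.0, n_samples)

    # Move to the frequency domain, where filtering is a multiplication.
    spectrum = np.fft.rfft(noise)

    # Linearly interpolate the coarse band gains to one gain per FFT bin.
    band_magnitudes = np.asarray(band_magnitudes, dtype=np.float64)
    bin_positions = np.linspace(0.0, 1.0, spectrum.shape[0])
    band_positions = np.linspace(0.0, 1.0, band_magnitudes.shape[0])
    response = np.interp(bin_positions, band_positions, band_magnitudes)

    # Apply the gains and return to the time domain.
    return np.fft.irfft(spectrum * response, n=n_samples)

# Keep only the lowest part of the spectrum.
noise_out = filtered_noise(16000, [1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
```

In the signal chain described above, an output like `noise_out` would be added sample by sample to the additive synthesizer's output before the reverb stage, and each of those intermediate signals can be listened to on its own.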

Timbre Transfer

Timbre transfer from singing voice to violin. F0 and loudness features are extracted from the voice and resynthesized with a DDSP decoder trained on solo violin. To better match the conditioning features, we first shift the fundamental frequency of the singing up by two octaves to fit a violin’s typical register. The resulting audio captures many subtleties of the singing with the timbre and room acoustics of the violin dataset. Note the interesting “breathing” artifacts in the silence corresponding to unvoiced syllables from the singing.
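The register shift is simple arithmetic on the extracted F0 contour: each octave doubles the frequency, so two octaves up multiplies every value by four. A minimal sketch, where `transpose_octaves` is a hypothetical helper name and the input values are made up for illustration:

```python
import numpy as np

def transpose_octaves(f0_hz, octaves):
    """Shift a fundamental-frequency contour by a number of octaves.

    Each octave is a factor of two in Hz; negative values shift down.
    (Hypothetical helper, not part of any library.)
    """
    return np.asarray(f0_hz, dtype=np.float64) * 2.0 ** octaves

# Illustrative sung contour moved up two octaves.
voice_f0 = np.array([110.0, 123.5, 130.8])
violin_register_f0 = transpose_octaves(voice_f0, 2)
```

Only the F0 feature is changed; the loudness contour is passed to the decoder as extracted.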

Extrapolation

Besides feeding the decoder, the fundamental frequency directly controls the additive synthesizer and has structural meaning outside the context of any given dataset. Beyond interpolating between datapoints, this inductive bias enables the model to extrapolate to new conditions not seen during training. Here, we resynthesize audio from the solo violin model after transposing the fundamental frequency down an octave and outside the range of the training data. The audio remains coherent and resembles a related instrument such as a cello.

Dereverberation and Acoustic Transfer

As seen above, a benefit of our modular approach to generative modeling is that it becomes possible to completely separate the source audio from the effect of the room. Bypassing the reverb module during resynthesis results in completely dereverberated audio, similar to recording in an anechoic chamber. We can also apply the learned reverb model to new audio, in this case singing, and effectively transfer the acoustic environment of the solo violin recordings.
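One common way to model a room is as a convolution of the dry signal with an impulse response, and the sketch below assumes that form. The names `apply_reverb` and `resynthesize`, the FFT-based convolution, and the truncation to the input length are choices made for this example rather than a description of the library's reverb module.

```python
import numpy as np

def apply_reverb(dry, impulse_response):
    """Convolve a dry signal with a room impulse response via the FFT.

    The result is truncated to the length of `dry` (an assumption).
    """
    dry = np.asarray(dry, dtype=np.float64)
    ir = np.asarray(impulse_response, dtype=np.float64)
    n_fft = dry.shape[0] + ir.shape[0] - 1  # full linear-convolution length
    wet = np.fft.irfft(np.fft.rfft(dry, n_fft) * np.fft.rfft(ir, n_fft), n_fft)
    return wet[: dry.shape[0]]

def resynthesize(dry, impulse_response=None):
    """Return the dry signal when the reverb is bypassed, else the wet one."""
    if impulse_response is None:
        return np.asarray(dry, dtype=np.float64)  # dereverberated output
    return apply_reverb(dry, impulse_response)

# A toy impulse response: direct sound plus one echo two samples later.
toy_ir = np.array([1.0, 0.0, 0.5])
click = np.array([1.0, 0.0, 0.0, 0.0])

dereverberated = resynthesize(click)       # reverb bypassed
transferred = resynthesize(click, toy_ir)  # same room applied to new audio
```

Because the room lives entirely in `impulse_response`, the same array can be reused on any other dry signal, which is the idea behind the acoustic transfer described above.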

Phase Invariance

The maximum likelihood loss of autoregressive waveform models is imperfect because a waveform's shape does not perfectly correspond to perception. For example, the three waveforms below sound identical (they differ only by a relative phase offset of the harmonics) but would present different losses to an autoregressive model.
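The effect is easy to reproduce numerically. The sketch below builds three waveforms from the same three harmonics with different phase offsets, then compares them sample by sample and by magnitude spectrum. The helper `harmonic_wave`, the 100 Hz fundamental, and the particular phase values are assumptions chosen for the illustration.

```python
import numpy as np

def harmonic_wave(phases, f0_hz=100.0, sample_rate=16000, n_samples=16000):
    """Sum harmonics k = 1, 2, ... of f0 with amplitude 1/k and given phases."""
    t = np.arange(n_samples) / sample_rate
    wave = np.zeros(n_samples)
    for k, phi in enumerate(phases, start=1):
        wave += np.sin(2.0 * np.pi * k * f0_hz * t + phi) / k
    return wave

# Same harmonic amplitudes, different relative phases.
wave_a = harmonic_wave([0.0, 0.0, 0.0])
wave_b = harmonic_wave([0.0, np.pi / 2, np.pi])
wave_c = harmonic_wave([0.0, np.pi, np.pi / 3])

# Sample-wise error, the kind of difference a waveform loss would see.
waveform_mse_ab = np.mean((wave_a - wave_b) ** 2)
waveform_mse_ac = np.mean((wave_a - wave_c) ** 2)

# Magnitude spectra discard phase, so they agree across all three.
mag_a = np.abs(np.fft.rfft(wave_a))
mag_b = np.abs(np.fft.rfft(wave_b))
mag_c = np.abs(np.fft.rfft(wave_c))
spectral_gap = max(np.max(np.abs(mag_a - mag_b)), np.max(np.abs(mag_a - mag_c)))
```

The waveform errors are clearly nonzero while the spectral gap is at the level of floating-point noise, which is why a loss computed on magnitude spectra does not penalize these phase differences.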

While the original models were not optimized for size, initial experiments are promising in scaling down for realtime applications. The "tiny" model below is a single 256-unit GRU trained on the solo violin dataset and performing timbre transfer.

Reconstruction Comparisons

The DDSP Autoencoder model uses a CREPE model to extract fundamental frequency. The supervised variant uses pretrained weights that are fixed during training, while the unsupervised variant learns the weights jointly with the rest of the network. The unsupervised model learns to generate the correct frequency, but with less timbral accuracy due to the increased difficulty of the task.
